Possible extra topics

One of the rubric items for the course project is to include something “extra” that wasn’t covered in Math 10. Here are a few possibilities. It’s even better if you find your own extra topic; it can be anything in Python that interests you.

More possibilities will be added as I think of them.

Different Python libraries

If you want to use a Python library that isn’t installed by default in Deepnote, you can install it yourself within Deepnote using a line of code like the following, which installs the vega_datasets library. Notice the exclamation point at the beginning: it tells the notebook to run the line as a shell command rather than as Python code. (The exclamation point probably won’t appear in the documentation you find for the library.)

```
!pip install vega_datasets
```
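Before installing, you might first check whether a library is already available. The following is a minimal sketch using the standard-library function `importlib.util.find_spec`. The helper name `is_installed` is hypothetical. Note that it checks the name you *import*, which can differ from the name you pass to pip. For example, Pillow is installed with `pip install pillow` but imported as `PIL`.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Hypothetical helper: True if `module_name` can be imported in this environment."""
    # find_spec returns None when Python cannot find a module with this name
    return importlib.util.find_spec(module_name) is not None


for name in ["math", "vega_datasets"]:
    print(name, is_installed(name))
```

If the check prints `False` for a library you need, that is the signal to run the `!pip install` line.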

Kaggle

Browse Kaggle. Go to a competition or dataset you find interesting, and then click on the Code tab near the top. You will reach a page like the Code page for the Fashion-MNIST dataset, where you can browse through other people’s Kaggle notebooks for ideas.

pandas groupby

A very useful tool in pandas, which unfortunately we did not cover this quarter, is groupby. It splits a DataFrame into groups so that you can compute something separately for each group; the pandas user guide calls this pattern “split-apply-combine”. Here are examples from the pandas user guide.

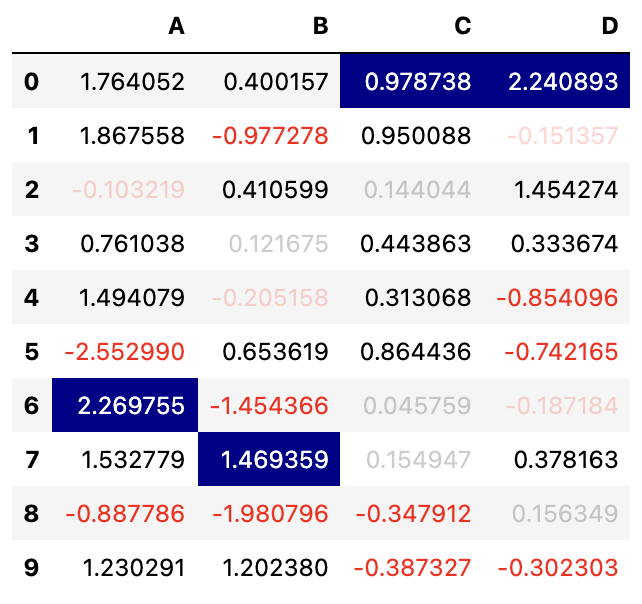
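The following is a minimal sketch of split-apply-combine on a small made-up DataFrame. The column names `species` and `weight` and their values are hypothetical.

```python
import pandas as pd

# A small made-up DataFrame
df = pd.DataFrame(
    {
        "species": ["cat", "dog", "cat", "dog", "bird"],
        "weight": [4.0, 20.0, 5.0, 30.0, 0.5],
    }
)

# Split the rows by "species", apply mean() to each group's weights,
# and combine the results into one Series indexed by species
mean_weights = df.groupby("species")["weight"].mean()
print(mean_weights)

# You can also loop over the groups; each group is itself a DataFrame
for species, group in df.groupby("species"):
    print(species, len(group))
```

By default the group keys come out sorted, so `bird` is listed first. Replacing `.mean()` with `.agg(["mean", "max"])` computes several statistics at once.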

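pandas styler

See the Styler examples in the pandas documentation. The Styler, which you get from df.style, provides a way to highlight certain cells in a pandas DataFrame, and it is good practice using apply and applymap. (In pandas 2.1 and later, Styler.applymap has been renamed Styler.map, and the old name is deprecated.)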
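The following is a minimal sketch of the two styling patterns: an element-wise rule, which receives one cell at a time, and a column-wise rule, which receives a whole column. It assumes pandas 2.1 or later for `Styler.map`; older versions call it `Styler.applymap`. Rendering a Styler also needs the optional jinja2 package. All of the function names are hypothetical.

```python
import pandas as pd


def color_negative_red(value):
    """Element-wise rule: return a CSS string for a single cell."""
    return "color: red" if value < 0 else ""


def highlight_column_max(column):
    """Column-wise rule: given one column (a Series), return one CSS string per cell."""
    is_max = column == column.max()
    return ["background-color: yellow" if flag else "" for flag in is_max]


def style_frame(df):
    """Attach both rules to df (needs jinja2, and pandas >= 2.1 for Styler.map)."""
    return df.style.map(color_negative_red).apply(highlight_column_max, axis=0)


df = pd.DataFrame({"a": [1, -2, 3], "b": [-4, 5, 6]})

# Check the rules on their own, without rendering anything
print(highlight_column_max(df["a"]))
print([color_negative_red(v) for v in df["b"]])
```

In a notebook, ending a cell with `style_frame(df)` displays the highlighted table. With `axis=0`, `apply` passes each column to the function. With `axis=1`, it passes each row instead.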

Random forests in scikit-learn

Random forests are maybe the machine learning method I see most often in Kaggle competitions. In scikit-learn they are available as RandomForestClassifier and RandomForestRegressor.
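The following is a minimal sketch using scikit-learn’s `RandomForestClassifier` on the iris dataset. Iris is chosen here only because it ships with scikit-learn, and the train/test split and parameters are arbitrary. A random forest trains many decision trees and combines their predictions. Each tree is fit on a bootstrap sample of the training rows and considers a random subset of features at each split.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# 100 decision trees, each fit on a bootstrap sample of the training rows
clf = RandomForestClassifier(n_estimators=100, random_state=0)
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)  # fraction of test rows classified correctly
print(f"Test accuracy: {accuracy:.3f}")

# How much each of the four iris features contributed to the trees' splits
print(clf.feature_importances_)
```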

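Clustering

All the machine learning we did in Math 10 during Winter 2022 was supervised learning, where the model is trained on data that comes with the correct answers (labels). One of the main examples of unsupervised learning is clustering, where the model divides the data into different clusters without being given any labels.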
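The following is a minimal sketch of k-means clustering with scikit-learn on synthetic data from `make_blobs`. The true labels that `make_blobs` returns are discarded, because in unsupervised learning the model never sees them. Asking for 3 clusters is an assumption that happens to match how this data was generated. With real data, you would have to choose that number yourself.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# 300 points in 2D drawn around 3 centers; the true labels are discarded
X, _ = make_blobs(n_samples=300, centers=3, random_state=0)

kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
labels = kmeans.fit_predict(X)  # cluster index (0, 1, or 2) for each point

print(labels[:10])
print(kmeans.cluster_centers_)
```

Unlike with a classifier, nothing here says which cluster is “correct”. The numbers 0, 1, 2 are arbitrary names for the clusters the model found.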

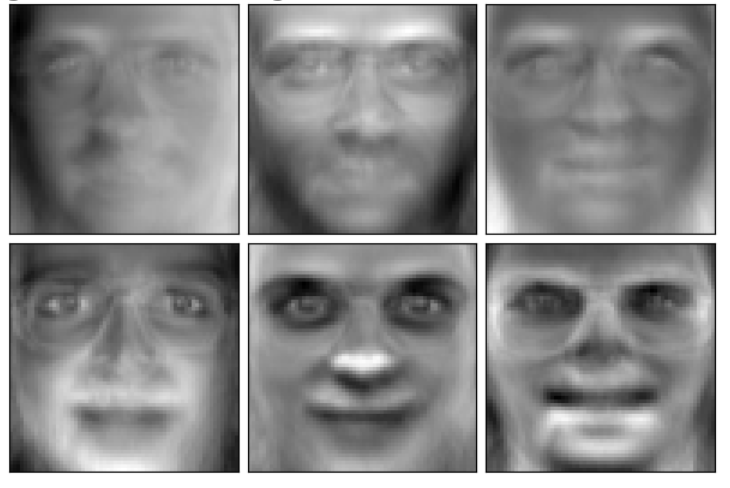

Principal Component Analysis in scikit-learn

Another type of unsupervised learning is dimensionality reduction. Principal Component Analysis (PCA) is a famous example, and it involves more advanced linear algebra: it finds the directions along which the data varies the most, which can be computed from the singular value decomposition of the centered data. The above image shows a visual example of the result of PCA.
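The following is a minimal sketch using scikit-learn’s `PCA` to reduce the four-dimensional iris data to two dimensions. The last lines check the linear algebra connection: the singular values that PCA reports should match those of the centered data matrix, computed directly with NumPy. The sketch sets `svd_solver="full"` so that the comparison uses an exact SVD.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X, _ = load_iris(return_X_y=True)  # 150 rows, 4 features; labels unused

pca = PCA(n_components=2, svd_solver="full")
X_2d = pca.fit_transform(X)  # each row is now described by 2 numbers

print(X_2d.shape)
# Fraction of the total variance captured by each of the two components
print(pca.explained_variance_ratio_)

# PCA centers the data and takes its SVD; compare with NumPy directly
centered = X - X.mean(axis=0)
singular_values = np.linalg.svd(centered, compute_uv=False)
print(np.allclose(pca.singular_values_, singular_values[:2]))
```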

PyTorch extras

Try some other optimizers (especially Adam) or loss functions, or go into more detail about the ones we used. (What is momentum in stochastic gradient descent? Why is it useful? How does softmax work? How does log likelihood work?)

Instead of a fully connected neural network, like what we did in class, try to make a convolutional neural network.
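The following NumPy and plain-Python sketch addresses two of the questions above. It is written from the standard formulas, not taken from PyTorch’s source.

- **Softmax and negative log likelihood.** Softmax turns a vector of scores into probabilities. The negative log likelihood loss is minus the log of the probability assigned to the correct class. PyTorch’s `nn.CrossEntropyLoss` combines these two steps.
- **SGD with momentum.** The update follows the form given in the documentation for PyTorch’s `torch.optim.SGD` with dampening 0. A velocity accumulates a decaying sum of past gradients, and the parameter moves along the velocity.

The function f(x) = x² and all hyperparameters are arbitrary choices for illustration.

```python
import numpy as np


def softmax(logits):
    """Convert scores into probabilities that are positive and sum to 1."""
    # Subtracting the max avoids overflow in exp and does not change the result
    exps = np.exp(logits - np.max(logits))
    return exps / exps.sum()


def negative_log_likelihood(logits, target):
    """Loss for one example: -log(probability assigned to the correct class)."""
    return -np.log(softmax(logits)[target])


def sgd_momentum(grad, x0, lr=0.1, momentum=0.9, steps=500):
    """Minimize a 1D function given its gradient, using SGD with momentum."""
    x, velocity = x0, 0.0
    for _ in range(steps):
        velocity = momentum * velocity + grad(x)  # decaying sum of past gradients
        x = x - lr * velocity  # step along the velocity, not just the current gradient
    return x


logits = np.array([2.0, 1.0, 0.1])
probs = softmax(logits)
print(probs, probs.sum())
# Class 0 has the highest probability, so its loss is smaller than class 2's
print(negative_log_likelihood(logits, 0), negative_log_likelihood(logits, 2))

# f(x) = x**2 has gradient 2x and its minimum at x = 0
print(sgd_momentum(lambda x: 2 * x, x0=5.0))
```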

More Machine Learning options

I don’t know much about these, but some very popular tools in machine learning include the following. (Just getting some of them to run in Deepnote could already be impressive; I haven’t tried, so I don’t know how straightforward that is.)

Other libraries

Here are a few other libraries that you might find interesting. (Most of these are already installed in Deepnote.)

sympy for symbolic computation, like what you did in Math 9 using Mathematica.

Pillow for image processing.

re for advanced string methods using regular expressions.

Seaborn and Plotly. We introduced these plotting libraries briefly, together with Altair, early in Winter 2022. Their syntax is similar to Altair’s.

Keras, the main rival to PyTorch for neural networks.
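The following is a minimal sketch of a few functions from Python’s built-in re module. The text and patterns are made up for illustration. The email pattern is deliberately simple and would not handle every valid address.

```python
import re

text = "Email alice@example.com or bob@example.org before 2022-03-18."

# findall returns every non-overlapping match as a list of strings
emails = re.findall(r"[\w.+-]+@[\w-]+\.\w+", text)
print(emails)

# search finds the first match; parentheses create groups you can pull out
match = re.search(r"(\d{4})-(\d{2})-(\d{2})", text)
year, month, day = match.groups()
print(year, month, day)

# sub replaces every match; here each run of whitespace becomes a single space
cleaned = re.sub(r"\s+", " ", "too   many \t spaces")
print(cleaned)
```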