Applying Machine Learning techniques to the Fashion MNIST data set


Author: Sara-Grace Lien

Email: sjlien@uci.edu or saragracelien@gmail.com

Course Project, UC Irvine, Math 10, F22

Introduction#

For this project, I will analyse the FashionMNIST data set and use machine learning models to classify each image by its clothing label. I will then examine the results with plotting tools such as Altair.

Exploring and loading the data#

In this portion of the project, I will use Pandas to explore the data.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

df_train = pd.read_csv('fashion-mnist_train.csv')

df_test = pd.read_csv('fashion-mnist_test.csv')

df_test

| | label | pixel1 | pixel2 | pixel3 | pixel4 | pixel5 | pixel6 | pixel7 | pixel8 | pixel9 | ... | pixel775 | pixel776 | pixel777 | pixel778 | pixel779 | pixel780 | pixel781 | pixel782 | pixel783 | pixel784 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 9 | 8 | ... | 103 | 87 | 56 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 34 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 14 | 53 | 99 | ... | 0 | 0 | 0 | 0 | 63 | 53 | 31 | 0 | 0 | 0 |

| 3 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 137 | 126 | 140 | 0 | 133 | 224 | 222 | 56 | 0 | 0 |

| 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 9995 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 32 | 23 | 14 | 20 | 0 | 0 | 1 | 0 | 0 | 0 |

| 9996 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 2 | 52 | 23 | 28 | 0 | 0 | 0 |

| 9997 | 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 175 | 172 | 172 | 182 | 199 | 222 | 42 | 0 | 1 | 0 |

| 9998 | 8 | 0 | 1 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| 9999 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 140 | 119 | ... | 111 | 95 | 75 | 44 | 1 | 0 | 0 | 0 | 0 | 0 |

10000 rows × 785 columns

df_train

| | label | pixel1 | pixel2 | pixel3 | pixel4 | pixel5 | pixel6 | pixel7 | pixel8 | pixel9 | ... | pixel775 | pixel776 | pixel777 | pixel778 | pixel779 | pixel780 | pixel781 | pixel782 | pixel783 | pixel784 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | ... | 0 | 0 | 0 | 30 | 43 | 0 | 0 | 0 | 0 | 0 |

| 3 | 0 | 0 | 0 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | ... | 3 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 59995 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 59996 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 73 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 59997 | 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 160 | 162 | 163 | 135 | 94 | 0 | 0 | 0 | 0 | 0 |

| 59998 | 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 59999 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

60000 rows × 785 columns

Checking for any empty values using .isna()#

Because the data I downloaded came as two separate data sets, I will check both just in case.

# looking for any NA

df_train.isna().any().sum()

# .any() flags each column that contains an NA; .sum() then counts those columns.

0

df_test.isna().any(axis=0).sum()

0

We can conclude that there are no empty values in the data set.
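The check above counts columns that contain a missing value, not individual missing cells. A minimal sketch of the difference, using a small made-up frame (the values and the name `toy` are assumptions for illustration only):

```python
import numpy as np
import pandas as pd

# Toy frame (hypothetical values) with two missing cells in one column
toy = pd.DataFrame({
    "label": [0, 1, 2],
    "pixel1": [0.0, np.nan, np.nan],
    "pixel2": [5.0, 7.0, 9.0],
})

cols_with_na = int(toy.isna().any().sum())  # columns containing at least one NA
total_na = int(toy.isna().sum().sum())      # total number of NA cells

print(cols_with_na, total_na)
```

Either count being 0 is enough to show that a frame has no missing values, which is the case for both data sets here.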

#type of data

type(df_test)

pandas.core.frame.DataFrame

type(df_train)

pandas.core.frame.DataFrame

df_test.columns

Index(['label', 'pixel1', 'pixel2', 'pixel3', 'pixel4', 'pixel5', 'pixel6',

'pixel7', 'pixel8', 'pixel9',

...

'pixel775', 'pixel776', 'pixel777', 'pixel778', 'pixel779', 'pixel780',

'pixel781', 'pixel782', 'pixel783', 'pixel784'],

dtype='object', length=785)

df_train.columns

Index(['label', 'pixel1', 'pixel2', 'pixel3', 'pixel4', 'pixel5', 'pixel6',

'pixel7', 'pixel8', 'pixel9',

...

'pixel775', 'pixel776', 'pixel777', 'pixel778', 'pixel779', 'pixel780',

'pixel781', 'pixel782', 'pixel783', 'pixel784'],

dtype='object', length=785)

df_train.dtypes

label int64

pixel1 int64

pixel2 int64

pixel3 int64

pixel4 int64

...

pixel780 int64

pixel781 int64

pixel782 int64

pixel783 int64

pixel784 int64

Length: 785, dtype: object

df_test.dtypes

label int64

pixel1 int64

pixel2 int64

pixel3 int64

pixel4 int64

...

pixel780 int64

pixel781 int64

pixel782 int64

pixel783 int64

pixel784 int64

Length: 785, dtype: object

df_train.shape

(60000, 785)

df_test.shape

(10000, 785)

df_train['label']

0 2

1 9

2 6

3 0

4 3

..

59995 9

59996 1

59997 8

59998 8

59999 7

Name: label, Length: 60000, dtype: int64

print(df_train.groupby(['label']).size())

label

0 6000

1 6000

2 6000

3 6000

4 6000

5 6000

6 6000

7 6000

8 6000

9 6000

dtype: int64

df_test['label']

0 0

1 1

2 2

3 2

4 3

..

9995 0

9996 6

9997 8

9998 8

9999 1

Name: label, Length: 10000, dtype: int64

print(df_test.groupby(['label']).size())

label

0 1000

1 1000

2 1000

3 1000

4 1000

5 1000

6 1000

7 1000

8 1000

9 1000

dtype: int64

Conclusion

I wanted to know how many images of each clothing item there are in the data set, so I used groupby to count them. In both the train and test sets, every class has the same number of images (6000 and 1000 per class respectively). From looking at the dataframe, I found that the first column is the label, which I will later use as the y value. The remaining 784 columns hold the value of each pixel in the 28 by 28 image of each clothing item.

The two data sets have the same columns and data types and differ only in size; the larger one will serve as the training set.
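As a possible shortcut for the class-balance check, `value_counts` gives the same per-label counts as the groupby above. A small sketch on a made-up label column (the series `labels` is hypothetical; the real one would be `df_train["label"]`):

```python
import pandas as pd

# Hypothetical label column; the real one is df_train["label"]
labels = pd.Series([0, 1, 2, 0, 1, 2, 0, 1, 2], name="label")

counts = labels.value_counts().sort_index()
is_balanced = counts.nunique() == 1  # True when every class has the same count

print(counts.to_dict(), is_balanced)
```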

Loading the data

Since the data is already split into train and test sets, I will not be using the train_test_split function from sklearn. Below, the pixel columns are sliced starting from column index 1 because column 0 is the label. So x_train holds the pixel data for the training images and y_train holds their respective labels; the same goes for the test data. The pixel values range from 0 to 255, and we want them between 0 and 1, so we normalize by dividing by 255. Applying the same scaling to both sets keeps the training and test data consistent.

train = np.array(df_train, dtype='float32')

test = np.array(df_test, dtype='float32')

x_train = train[:, 1:] / 255

y_train = train[:, 0]

x_test = test[:, 1:] / 255

y_test = test[:, 0]

x_train

array([[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]], dtype=float32)

y_train

array([2., 9., 6., ..., 8., 8., 7.], dtype=float32)
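The slicing and scaling step can be sanity-checked on a tiny stand-in array. This is only a sketch: the array `raw` is made up, and casting the labels to integers is a suggestion rather than what the notebook does (it keeps them as float32).

```python
import numpy as np

# Hypothetical 3-row stand-in for the CSV: column 0 is the label, the rest are pixels
raw = np.array([
    [2, 0, 128, 255],
    [9, 255, 0, 64],
    [6, 32, 32, 32],
], dtype="float32")

x = raw[:, 1:] / 255       # pixel columns scaled to [0, 1]
y = raw[:, 0].astype(int)  # labels kept as integers rather than floats

assert x.min() >= 0.0 and x.max() <= 1.0
print(x.shape, y)
```

Integer labels would also show up as 0, 1, 2 instead of 0.0, 1.0, 2.0 in later tables.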

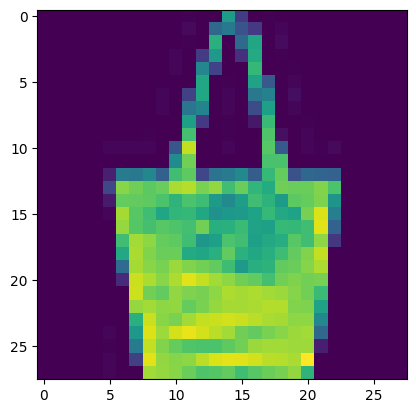

Here we use matplotlib to display an example of what an image looks like. I picked an arbitrary index to showcase one item.

image = x_train[9].reshape((28, 28)) # we can use any index

plt.imshow(image)

plt.show()

Reference: https://www.youtube.com/watch?v=N3oMKS1AfVI&ab_channel=MarkJay https://www.kaggle.com/code/kutubkapadia/fashion-mnist-with-tensorflow

Fitting a random forest classifier#

from sklearn.ensemble import RandomForestClassifier

rfc = RandomForestClassifier(n_estimators= 150, max_depth= 150, max_leaf_nodes= 150, random_state=1234)

rfc.fit(x_train,y_train)

RandomForestClassifier(max_depth=150, max_leaf_nodes=150, n_estimators=150,

random_state=1234)

rfc_pred = rfc.predict(x_train)

(rfc_pred == y_train).mean()

0.8559166666666667

rfc_pred2 = rfc.predict(x_test)

(rfc_pred2 == y_test).mean()

0.8465

Conclusions from RandomForestClassifier#

The RandomForestClassifier is a model that combines the votes of many decision trees. It does not rely on the splits chosen by a single decision tree, and since the FashionMNIST data set has 784 features, the randomized feature selection helps. This is why I used it instead of a DecisionTreeClassifier. The train accuracy (about 85.6%) and the test accuracy (about 84.7%) are very close, which suggests the model is not overfitting: it has learned patterns that carry over to images it has not seen.
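To see how a restriction such as max_leaf_nodes relates to the gap between train and test accuracy, here is a rough sketch. It uses scikit-learn's small built-in digits data set as a stand-in (an assumption made so the example runs quickly), and the leaf limits 10, 150 and None are illustrative choices, not tuned values.

```python
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# load_digits is a small built-in data set used here only as a stand-in
X, y = load_digits(return_X_y=True)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, random_state=0)

results = {}
for leaves in (10, 150, None):  # None lets each tree grow without a leaf limit
    model = RandomForestClassifier(n_estimators=50, max_leaf_nodes=leaves, random_state=0)
    model.fit(X_tr, y_tr)
    # score() returns accuracy, the same quantity as (pred == y).mean()
    results[leaves] = (model.score(X_tr, y_tr), model.score(X_te, y_te))

for leaves, (train_acc, test_acc) in results.items():
    print(leaves, round(train_acc, 3), round(test_acc, 3), "gap:", round(train_acc - test_acc, 3))
```

A small gap together with a reasonable test accuracy is the pattern described above; a large gap would point to overfitting.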

Why classification model?

Although the data labels are numbers, they stand for classes with no numerical meaning: label 4 (coat) is not "twice" label 2 (pullover). A regression model would treat the labels as quantities, so it would not make sense to use here.

Reference: https://www.analyticsvidhya.com/blog/2020/05/decision-tree-vs-random-forest-algorithm/#h2_6 https://www.simplilearn.com/regression-vs-classification-in-machine-learning-article

Using plotting tools to analyse the data#

Making a Confusion Matrix using altair#

import pandas as pd

df = pd.DataFrame(y_test, columns=['Clothing'])

df["Pred"] = rfc.predict(x_test)

df

| | Clothing | Pred |

|---|---|---|

| 0 | 0.0 | 0.0 |

| 1 | 1.0 | 1.0 |

| 2 | 2.0 | 2.0 |

| 3 | 2.0 | 2.0 |

| 4 | 3.0 | 3.0 |

| ... | ... | ... |

| 9995 | 0.0 | 0.0 |

| 9996 | 6.0 | 6.0 |

| 9997 | 8.0 | 8.0 |

| 9998 | 8.0 | 8.0 |

| 9999 | 1.0 | 2.0 |

10000 rows × 2 columns

# Confusion Matrix

import altair as alt

alt.data_transformers.enable('default', max_rows=15000)

c = alt.Chart(df).mark_rect().encode(

x="Clothing:N",

y="Pred:N",

color = alt.Color("count()",scale=alt.Scale(scheme="plasma"))

)

c_text = alt.Chart(df).mark_text(color="white").encode(

x="Clothing:N",

y="Pred:N",

text="count()"

)

(c+c_text).properties(

height=400,

width=400

)
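The same counts can also be tabulated without a chart. A minimal sketch with pd.crosstab on made-up labels (the frame `toy` is hypothetical and stands in for the `df` built above):

```python
import pandas as pd

# Hypothetical true and predicted labels standing in for df["Clothing"] and df["Pred"]
toy = pd.DataFrame({
    "Clothing": [0, 0, 0, 6, 6, 6, 4, 4],
    "Pred":     [0, 0, 6, 0, 6, 6, 4, 6],
})

# Rows are true labels, columns are predicted labels
cm = pd.crosstab(toy["Clothing"], toy["Pred"])
print(cm)
```

Note the orientation: in the Altair chart the true label is on the horizontal axis, while in this table it indexes the rows.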

What did we learn from this chart?

Let’s refer to the labels from the FashionMNIST data set page on Kaggle found here: https://www.kaggle.com/datasets/zalando-research/fashionmnist

Each training and test example is assigned to one of the following labels:

0 T-shirt/top
1 Trouser
2 Pullover
3 Dress
4 Coat
5 Sandal
6 Shirt
7 Sneaker
8 Bag
9 Ankle boot
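To make tables and charts easier to read, the numeric labels can be mapped to these names. A short sketch, where the dictionary name `LABEL_NAMES` and the example predictions are assumptions for illustration:

```python
import pandas as pd

# Label names as listed on the Kaggle data set page
LABEL_NAMES = {
    0: "T-shirt/top", 1: "Trouser", 2: "Pullover", 3: "Dress", 4: "Coat",
    5: "Sandal", 6: "Shirt", 7: "Sneaker", 8: "Bag", 9: "Ankle boot",
}

# Hypothetical predictions stored as floats, as in the Pred column above
preds = pd.Series([0.0, 6.0, 4.0, 9.0])
named = preds.astype(int).map(LABEL_NAMES)
print(named.tolist())
```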

I’m going to analyse T-shirt/tops, coats, and shirts for this portion of the project because, based on the chart, they seem to be involved in the most misclassifications.

T-shirt/Top

df.loc[df["Pred"] == 0, "Clothing"].value_counts(sort=False)

0.0 830

6.0 226

3.0 22

8.0 2

2.0 9

1.0 4

Name: Clothing, dtype: int64

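This cell filters on Pred, so it shows the true labels of the items that the model predicted as T-shirt/top. Of those items, 830 really were T-shirt/tops and 226 were actually shirts, so shirts are the items most often mistaken for T-shirt/tops. This makes sense because they are quite similar. The labelling also makes this confusing, as T-shirt, top and shirt are used interchangeably by most people (at least by me).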

Coat

df.loc[df["Pred"] == 4, "Clothing"].value_counts(sort=False)

0.0 3

6.0 95

3.0 29

8.0 3

2.0 171

1.0 1

4.0 820

Name: Clothing, dtype: int64

Pullovers (171) and shirts (95) are the items most often misclassified as coats. This makes sense as the shapes of a shirt and a pullover are similar to that of a coat.

Shirts

The previous two results show that shirts are often misclassified as other clothing. Now let’s look at the reverse: which items get misclassified as a shirt.

df.loc[df["Pred"] == 6, "Clothing"].value_counts(sort=False)

0.0 59

3.0 15

8.0 5

2.0 46

1.0 2

4.0 46

6.0 486

Name: Clothing, dtype: int64

This is interesting because most items have between 800 and 1000 correct classifications, but shirts have only around 400 to 500 (486 in this run). Since there are 1000 true shirts in the test set, the rest must have been predicted as something else, such as the T-shirt/tops and coats seen above. I think this is due to how vague the labels are. It might be better to split shirts into sub-categories like button-up shirt or blouse.
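The direction of the filter matters when reading these counts. A small sketch on made-up labels (the frame `toy` is hypothetical) contrasts filtering on Pred with filtering on Clothing:

```python
import pandas as pd

# Hypothetical labels: 6 stands for Shirt, 0 for T-shirt/top
toy = pd.DataFrame({
    "Clothing": [6, 6, 6, 6, 0, 0, 0, 0],
    "Pred":     [6, 6, 0, 0, 0, 0, 0, 6],
})

# Among items PREDICTED as 6, what were they really? (precision view)
predicted_as_shirt = toy.loc[toy["Pred"] == 6, "Clothing"].value_counts()

# Among items that really ARE 6, what were they predicted as? (recall view)
true_shirts = toy.loc[toy["Clothing"] == 6, "Pred"].value_counts()

precision = predicted_as_shirt.get(6, 0) / predicted_as_shirt.sum()
recall = true_shirts.get(6, 0) / true_shirts.sum()
print(round(precision, 3), round(recall, 3))
```

The cells above use the first form. Swapping the two column names gives the second form, which answers "what does a shirt get misclassified as?" directly.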

K-Nearest Neighbors Classifier#

This machine learning model calculates the distance from each image to the training images, finds its closest neighbours, and assigns the label most common among them. The goal of this portion is to find how many neighbors we need to produce the most accurate classifications without overfitting or underfitting the data. Reference: https://christopherdavisuci.github.io/UCI-Math-10-W22/Week6/Week6-Wednesday.html
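The idea can be sketched from scratch in a few lines of NumPy. This is an illustration only, not scikit-learn's implementation: the function `knn_predict` and the 2-D toy points are made up, and ties are broken in whatever order Counter returns them.

```python
import numpy as np
from collections import Counter

def knn_predict(x_train, y_train, x_new, k=3):
    """Predict the label of x_new by majority vote among its k nearest training points."""
    distances = np.linalg.norm(x_train - x_new, axis=1)  # Euclidean distance to every training row
    nearest = np.argsort(distances)[:k]                  # indices of the k closest rows
    votes = Counter(y_train[nearest].tolist())
    return votes.most_common(1)[0][0]

# Hypothetical 2-D points standing in for 784-pixel images
x_toy = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [1.0, 1.0], [0.9, 1.0], [1.0, 0.9]])
y_toy = np.array([0, 0, 0, 1, 1, 1])

print(knn_predict(x_toy, y_toy, np.array([0.05, 0.05])))
print(knn_predict(x_toy, y_toy, np.array([0.95, 0.95])))
```

Every prediction needs a distance to each training row, which is why the full 60000-image training set makes this classifier slow.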

from sklearn.neighbors import KNeighborsClassifier

from sklearn.metrics import mean_absolute_error

clf2 = KNeighborsClassifier(n_neighbors=10)

clf2.fit(x_train,y_train)

KNeighborsClassifier(n_neighbors=10)

clf2.predict(x_test)

array([0., 1., 2., ..., 8., 8., 2.], dtype=float32)

x_test.shape

(10000, 784)

x_train.shape

(60000, 784)

The data set is too large for repeated fitting. We need to cut it down so the code doesn't take too long to run.

x_train2 = x_train[0:1000]

x_test2 = x_test[0:1000]

y_train2 = y_train[0:1000]

y_test2 = y_test[0:1000]

mean_absolute_error(clf2.predict(x_test2), y_test2)

0.462

mean_absolute_error(clf2.predict(x_train2), y_train2)

0.426
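Because the labels are class codes rather than quantities, the mean absolute error depends on how the classes happen to be numbered. A minimal sketch, using made-up labels, of how it compares with the plain misclassification rate:

```python
import numpy as np

# Hypothetical true and predicted labels
y_true = np.array([0, 6, 9, 4, 2])
y_pred = np.array([0, 0, 0, 4, 2])

mae = np.mean(np.abs(y_pred - y_true))  # depends on how the classes happen to be numbered
error_rate = np.mean(y_pred != y_true)  # fraction of wrong predictions
print(mae, error_rate)
```

In this toy case two of five predictions are wrong, so the error rate is 0.4, while the MAE of 3.0 mostly reflects that labels 6 and 9 are numerically far from 0.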

Now that we have the mean absolute error for the test and train data, we next try to find which n_neighbors values produce the best results. For this, we will use a test error curve. We will create a function that repeats the computation above for a range of n_neighbors values.

def get_scores(k):

    clf2 = KNeighborsClassifier(n_neighbors=k)

    clf2.fit(x_train2, y_train2)

    train_error = mean_absolute_error(clf2.predict(x_train2), y_train2)

    test_error = mean_absolute_error(clf2.predict(x_test2), y_test2)

    return (train_error, test_error)

df_scores = pd.DataFrame({"k":range(1,100),"train_error":np.nan,"test_error":np.nan})

df_scores

| | k | train_error | test_error |

|---|---|---|---|

| 0 | 1 | NaN | NaN |

| 1 | 2 | NaN | NaN |

| 2 | 3 | NaN | NaN |

| 3 | 4 | NaN | NaN |

| 4 | 5 | NaN | NaN |

| ... | ... | ... | ... |

| 94 | 95 | NaN | NaN |

| 95 | 96 | NaN | NaN |

| 96 | 97 | NaN | NaN |

| 97 | 98 | NaN | NaN |

| 98 | 99 | NaN | NaN |

99 rows × 3 columns

%%timeit

for i in df_scores.index:

    df_scores.loc[i,["train_error","test_error"]] = get_scores(df_scores.loc[i,"k"])

40 s ± 167 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)
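Note that %%timeit reruns the cell several times, so the loop above is executed more than once. One possible alternative, sketched here with a hypothetical stand-in function `toy_scores` in place of get_scores, builds the table in one pass from a list of tuples:

```python
import pandas as pd

def toy_scores(k):
    """Hypothetical stand-in for get_scores: returns (train_error, test_error)."""
    return (1 - 1 / k, 1 - 1 / (k + 1))

ks = range(1, 6)
rows = [(k, *toy_scores(k)) for k in ks]  # one (k, train_error, test_error) tuple per k
df_toy = pd.DataFrame(rows, columns=["k", "train_error", "test_error"])
print(df_toy)
```

This avoids pre-filling the frame with NaN and writing into it row by row.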

df_scores

| | k | train_error | test_error |

|---|---|---|---|

| 0 | 1 | 0.000 | 0.764 |

| 1 | 2 | 0.438 | 0.729 |

| 2 | 3 | 0.560 | 0.749 |

| 3 | 4 | 0.500 | 0.699 |

| 4 | 5 | 0.500 | 0.704 |

| ... | ... | ... | ... |

| 94 | 95 | 0.937 | 0.922 |

| 95 | 96 | 0.964 | 0.924 |

| 96 | 97 | 0.947 | 0.922 |

| 97 | 98 | 0.948 | 0.916 |

| 98 | 99 | 0.972 | 0.911 |

99 rows × 3 columns

We add the inverse of k as a measure of flexibility: a smaller k (larger 1/k) gives a more flexible model.

df_scores["kinv"] = 1/df_scores.k

df_scores

| | k | train_error | test_error | kinv |

|---|---|---|---|---|

| 0 | 1 | 0.000 | 0.764 | 1.000000 |

| 1 | 2 | 0.438 | 0.729 | 0.500000 |

| 2 | 3 | 0.560 | 0.749 | 0.333333 |

| 3 | 4 | 0.500 | 0.699 | 0.250000 |

| 4 | 5 | 0.500 | 0.704 | 0.200000 |

| ... | ... | ... | ... | ... |

| 94 | 95 | 0.937 | 0.922 | 0.010526 |

| 95 | 96 | 0.964 | 0.924 | 0.010417 |

| 96 | 97 | 0.947 | 0.922 | 0.010309 |

| 97 | 98 | 0.948 | 0.916 | 0.010204 |

| 98 | 99 | 0.972 | 0.911 | 0.010101 |

99 rows × 4 columns

ctrain_point = alt.Chart(df_scores).mark_circle().encode(

x = "kinv",

y = "train_error",

tooltip = ['kinv', 'test_error','train_error', 'k']

).interactive()

ctest_point = alt.Chart(df_scores).mark_circle(color="orange").encode(

x = "kinv",

y = "test_error",

tooltip= ['kinv', 'test_error','train_error', 'k']

).interactive()

scatter = ctrain_point+ctest_point

ctrain_line = alt.Chart(df_scores).mark_line().encode(

x = "kinv",

y = "train_error",

tooltip = ['kinv', 'test_error','train_error', 'k']

).interactive()

ctest_line = alt.Chart(df_scores).mark_line(color="orange").encode(

x = "kinv",

y = "test_error",

tooltip= ['kinv', 'test_error','train_error', 'k']

).interactive()

line = ctrain_line+ctest_line

scatter+line

What do we learn from these charts?

With high n_neighbors values (large k), the model is underfitting, whereas with low n_neighbors values (small k), the model is overfitting. A high k spreads the vote over many neighbours, which restricts the model and makes it harder to tell similar classes apart. A low k places fewer restrictions on the model, so it fits the training data almost exactly (at k = 1 the train error is 0), making it harder to predict future observations reliably.
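The k with the lowest test error can be read off the table with idxmin. The sketch below uses only the first five rows of df_scores as displayed earlier, so its answer applies to those rows only; the full 99-row table may have its minimum elsewhere.

```python
import pandas as pd

# First five rows of df_scores as shown above; the full table has 99 rows
head = pd.DataFrame({
    "k": [1, 2, 3, 4, 5],
    "train_error": [0.000, 0.438, 0.560, 0.500, 0.500],
    "test_error": [0.764, 0.729, 0.749, 0.699, 0.704],
})

best = head.loc[head["test_error"].idxmin()]  # row with the smallest test error
print(int(best["k"]), best["test_error"])
```

Running the same line on the full df_scores would give the best k over the whole range that was tried.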

Summary#

I used Pandas to better understand the data and plotting tools to analyse the model's predictions. Based on the results, I drew conclusions about what they tell us about each model and looked for the best restrictions for each one. In this project, I applied previous knowledge of the Random Forest Classifier to classify the images and introduced the K-Nearest Neighbors Classifier for the ‘Extra’ portion of the project.

References#


What is the source of your dataset(s)?

https://www.kaggle.com/datasets/zalando-research/fashionmnist

List any other references that you found helpful.

https://www.datacamp.com/tutorial/k-nearest-neighbor-classification-scikit-learn https://www.bmc.com/blogs/data-normalization/ https://www.w3schools.com/python/numpy/numpy_array_slicing.asp
